The following blog is adapted from the book Industrial Cybersecurity Case Studies and Best Practices, authored by Steve Mustard. More excerpts will follow in the coming weeks. See Excerpt #1 here. See Excerpt #2 here. See Excerpt #3 here.

NEW: See Steve Mustard's October 2022 appearance on NasdaqTV here.

What We Can Learn from the Safety Culture

Visit any OT facility today and you will likely find several obvious cybersecurity policy violations or bad practices. Typical examples include the following:

- Poor physical security, such as unlocked equipment rooms or keys permanently left in equipment cabinet doors.

- Uncontrolled removable media used to transfer data.

- Poor electronic security, such as leaving user credentials visible. See Figure 1 for a real example of this bad practice.

Even in regulated industries, compliance with cybersecurity regulations is, at the time of this writing, not where it should be. In 2019, Duke Energy was fined $10 million by the North American Electric Reliability Corporation (NERC) for 127 violations of the NERC Critical Infrastructure Protection (CIP) regulations between 2015 and 2018.[1] Although this was an unusually large fine (consistent with a major regulatory violation), NERC continues to fine companies that fail to follow its cybersecurity regulations.

Figure 1. Control Room Console with User Credentials Visible on a Permanent Label.

Human Error

Accidental acts are a significant contributor to cybersecurity incidents today and have been since record keeping began. Surveys from IBM, Verizon, and others consistently show that the majority of cybersecurity incidents are caused by human error.

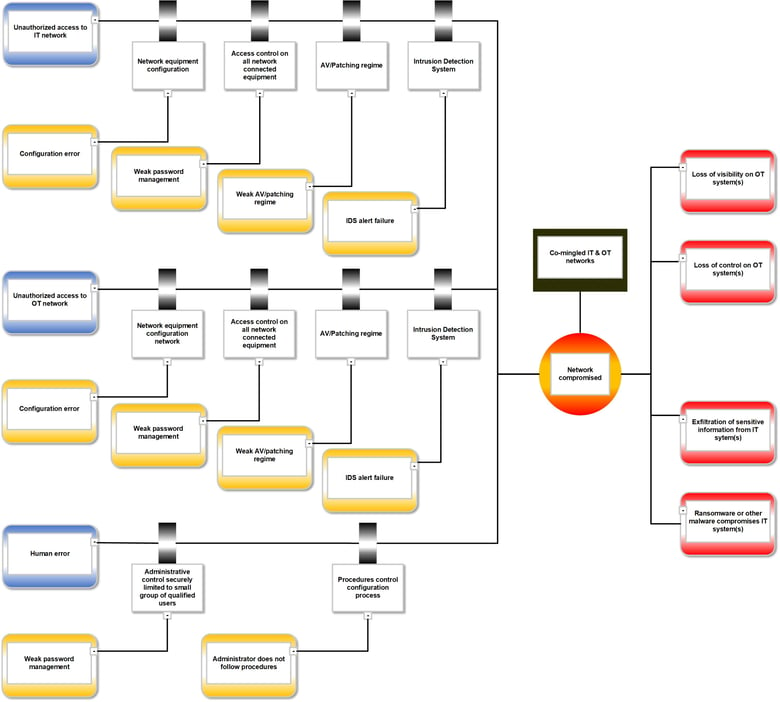

Figure 2 shows a bowtie diagram representing the potential threats to an organization with a co-mingled IT and OT network, including significant contributions from human error. These threats could result in a loss of visibility or control of OT systems, as well as exfiltration of sensitive information from IT systems or ransomware compromise of those systems.

Figure 2. Bowtie diagram showing potential for human error.

The bowtie diagram shows that the mitigations for the human error threat are heavily dependent on administrative controls. These include a well-managed access control process that limits elevated access to a small group of competent users, and rigorous procedures for tasks performed by users with elevated access.
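The access control mitigation described above can be turned into a periodic audit rather than a one-time assumption. Every account with elevated privileges should belong to a small group of users whose competency is current. The following is a minimal sketch under stated assumptions. The `Account` record and the `last_trained` dictionary are hypothetical stand-ins for whatever an organization's account export and training records contain. The one-year validity period and the three-user cap are illustrative values, not figures from the source.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Account:
    """Hypothetical account record exported from an OT access control system."""
    user: str
    privileged: bool


# Assumptions for illustration only; each organization sets its own values.
COMPETENCY_VALIDITY = timedelta(days=365)  # training counts as current for one year
MAX_PRIVILEGED_USERS = 3                   # cap on users holding elevated access


def audit_elevated_access(accounts, last_trained, today):
    """Return findings for elevated accounts that break the access policy.

    accounts     -- iterable of Account records
    last_trained -- dict mapping user name to date of last competency training
    today        -- date the audit is performed
    """
    findings = []
    privileged = [a for a in accounts if a.privileged]

    # Elevated access should be limited to a small group of users.
    if len(privileged) > MAX_PRIVILEGED_USERS:
        findings.append(
            f"{len(privileged)} privileged accounts exceed limit of {MAX_PRIVILEGED_USERS}"
        )

    # Every elevated user must have current, recorded competency.
    for acct in privileged:
        trained = last_trained.get(acct.user)
        if trained is None:
            findings.append(f"{acct.user}: elevated access with no training record")
        elif today - trained > COMPETENCY_VALIDITY:
            findings.append(f"{acct.user}: elevated access with lapsed competency")
    return findings


if __name__ == "__main__":
    accounts = [Account("alice", True), Account("bob", True), Account("carol", False)]
    last_trained = {"alice": date(2021, 3, 1)}
    for finding in audit_elevated_access(accounts, last_trained, today=date(2021, 6, 21)):
        print(finding)  # prints: bob: elevated access with no training record
```

A scheduled check like this makes the administrative control observable. It does not replace the rigorous procedures that users with elevated access must still follow.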

James Reason, professor emeritus of psychology at the University of Manchester, is the creator of the Swiss cheese model of accident causation. He argues that an effective safety culture requires a constant high level of respect for anything that might defeat safety systems. He says the key is “not forgetting to be afraid.” Systems with multiple layers of protection are designed so that no single failure leads to an accident. Paradoxically, such systems result in an “absence of sufficient accidents to steer by,” which eats away at the desired state of “intelligent and respectful wariness.”[2]

Reason warns that organizations should not assume they are safe simply because there is no information to the contrary. This mind-set leads to less concern about poor work practices or conditions. It may even reduce unease about identified deficiencies in layers of protection. The same thinking should be applied to cybersecurity. As noted earlier in this chapter, underestimation of cybersecurity risk and overconfidence in the layers of protection lead to a lack of concern. Left unchecked, this lack of concern can manifest as acceptance of bad practices such as use of unapproved removable media, leaving cabinets unlocked, leaving controllers open to remote programming, and poor account management.

Training and Competency

Organizations approach cybersecurity training in a variety of ways. Some create their own material, and some use specialist training providers. There are advantages to each approach. Custom material can be tailored to the organization and may therefore be more relevant, but it may lack the deep insights and broader experience of a specialist provider. A good compromise is to have a specialist training provider customize material for the organization.

No matter how the training is created and provided, it must cover all the required skills and knowledge.

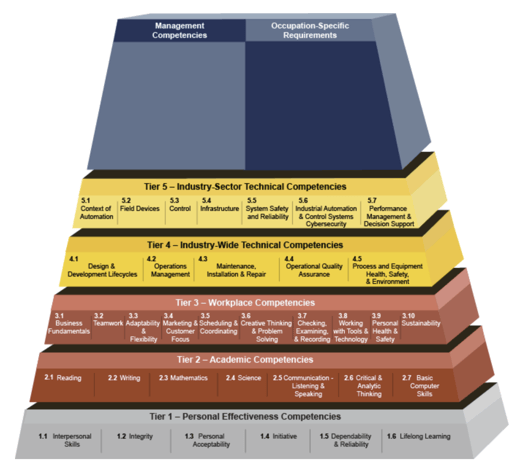

A great deal of excellent guidance is available for organizations to use in developing their training programs. The US Department of Labor’s Automation Competency Model (ACM), created in 2008, has been updated regularly with input from subject matter experts from the International Society of Automation (ISA) and elsewhere.[3] The ACM overview is shown in Figure 3. Each numbered block is a specific area of competency. Block 5.6 covers industrial automation and control systems cybersecurity. The specific skills and knowledge for this area of competency are described in the accompanying documentation.

Figure 3. The US Department of Labor ACM.

The blocks in the ACM should not be considered in isolation. Being effective in automation systems cybersecurity requires the skills and knowledge defined in other blocks in the ACM. Furthermore, most organizations have a mixture of IT and OT personnel who have some role to play in automation systems cybersecurity. Each role will need a minimum standard of competency that covers a variety of areas.

Continuous Evaluation

Training and competency are not one-time exercises. Personnel need continuous learning to ensure they are aware of changes to policies and procedures, risks, and mitigating controls. In addition, there must be a system of monitoring to ensure that training is effective.

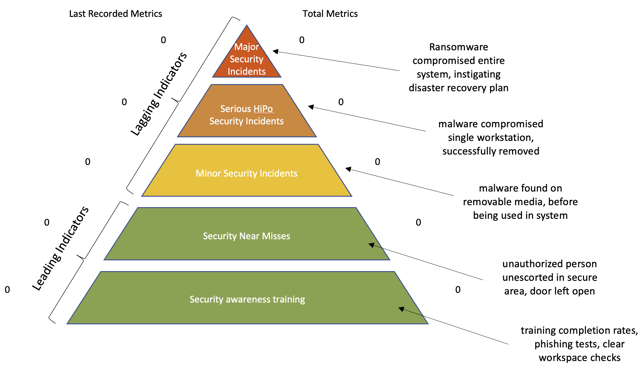

In health and safety management, lagging and leading indicators are critically important. The relationship between leading and lagging indicators for health and safety is commonly expressed in the form of the accident triangle, also known as Heinrich’s triangle or Bird’s triangle. The accident triangle is widely used in industries with health and safety risks. It is often adapted to include regional or organization-specific terms (e.g., HiPo or High Potential Accident instead of Serious Accidents) or to provide further categorization (e.g., showing Days Away from Work Cases and Recordable Injuries as types of Minor Accidents).

The accident triangle clearly shows the relationship between the earlier, more minor incidents and the later, more serious ones. This relationship can also be expressed in terms of leading and lagging indicators. In the accident triangle, unsafe acts and near misses are leading indicators of health and safety issues. As these numbers increase, so does the likelihood of more serious incidents. These more serious incidents are the lagging indicators. To minimize serious health and safety incidents, organizations monitor leading indicators such as unsafe acts or near misses.

The same approach can be adopted for cybersecurity monitoring. Monitoring near misses and performing regular audits verify that employees are following their training and procedures. This creates leading indicators that can be acted on so that the resulting lagging indicators stay within expectations. For example, if employees have training outstanding, they can be prompted to complete it. Observations and audits will show whether the training itself must be adjusted. An example cybersecurity triangle, based on the accident triangle, is shown in Figure 4.

Figure 4. Monitoring Cybersecurity Performance.
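The monitoring approach described above can be sketched in code. Observations are tallied into tiers of a cybersecurity triangle, and the leading tiers are compared between reporting periods. The sketch also includes the outstanding-training check mentioned as an example. Everything here is an illustrative assumption rather than the contents of Figure 4: the `Tier` names, the choice of which tiers count as leading, the simple period-over-period comparison, and the course IDs in the usage example.

```python
from collections import Counter
from enum import Enum


class Tier(Enum):
    """Hypothetical tiers of a cybersecurity triangle, from base to apex."""
    UNSAFE_ACT = "unsafe act"              # e.g., equipment cabinet left unlocked
    NEAR_MISS = "near miss"                # e.g., unapproved USB drive found before use
    MINOR_INCIDENT = "minor incident"
    SERIOUS_INCIDENT = "serious incident"


# Assumption: the two base tiers are treated as leading indicators.
LEADING = {Tier.UNSAFE_ACT, Tier.NEAR_MISS}


def leading_count(observations):
    """Count observations that fall into the leading-indicator tiers."""
    counts = Counter(observations)
    return sum(counts[tier] for tier in LEADING)


def leading_trend(previous, current):
    """Compare leading-indicator totals for two reporting periods.

    A "rising" result is the cue to act (retrain, revise procedures, increase
    audits) before the lagging tiers follow.
    """
    before, after = leading_count(previous), leading_count(current)
    if after > before:
        return "rising"
    if after < before:
        return "falling"
    return "steady"


def overdue_training(assigned, completed):
    """Return employees with at least one assigned course not yet completed.

    assigned, completed -- dicts mapping employee name to a set of course IDs
    """
    return sorted(
        emp for emp, courses in assigned.items()
        if courses - completed.get(emp, set())
    )


if __name__ == "__main__":
    q1 = [Tier.UNSAFE_ACT, Tier.NEAR_MISS, Tier.MINOR_INCIDENT]
    q2 = [Tier.UNSAFE_ACT, Tier.UNSAFE_ACT, Tier.UNSAFE_ACT, Tier.NEAR_MISS]
    print(leading_trend(q1, q2))  # prints: rising
    assigned = {"alice": {"ot-basics", "usb-policy"}, "bob": {"ot-basics"}}
    completed = {"alice": {"ot-basics"}, "bob": {"ot-basics"}}
    print(overdue_training(assigned, completed))  # prints: ['alice']
```

A simple increase or decrease is the crudest possible signal. In practice an organization would choose its own thresholds and reporting periods, and decide how to weight the tiers.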

Summary

Cybersecurity is constantly in the news, so it may seem reasonable to believe that people have a good awareness of the cybersecurity risks their organizations face. However, evidence indicates otherwise as incidents continue to occur. This trend is primarily driven by people failing to enforce good cybersecurity management practices. Even in regulated industries, organizations still fail to meet cybersecurity management requirements. This is largely due to personnel not following procedures, and a lack of oversight and enforcement by management.

Human behavior, including the tendency to make mistakes, must be understood if organizations are to provide good awareness training for their employees. Additional controls can be deployed to minimize the consequences of mistakes, but their effectiveness varies. Some administrative controls can be circumvented if employees are pressured into acting quickly or have limited resources. To overcome this issue, organizations must give employees the time and resources they need to perform their roles. They must also provide the right tools so that employees can work securely and safely while still being efficient. This situation is analogous to the organizational safety culture.

Cybersecurity training in the OT environment should not be limited to IT security concepts only. Aspects such as OT architectures, safety, physical security, standards, operations, risk management, and emergency response are essential for everyone. This includes workers from IT and OT backgrounds who are involved with cybersecurity management in the OT environment.

Along with awareness training, it is essential that organizations identify and monitor leading and lagging indicators. Again, the safety culture helps by identifying such metrics as near misses, HiPo incidents, and credible occurrence likelihood. These metrics can be readily converted to cybersecurity equivalents.

[1] See https://www.nerc.com/pa/comp/CE/Pages/Actions_2019/Enforcement-Actions-2019.aspx for details.

[2] James Reason, “Achieving a Safe Culture: Theory and Practice,” Work & Stress: An International Journal of Work, Health and Organisations 12, no. 3 (1998): 302, accessed June 21, 2021, https://www.tandfonline.com/doi/abs/10.1080/02678379808256868.

[3] Career Onestop Competency Model Clearing House, “Automation Competency Model,” accessed June 21, 2021, https://www.careeronestop.org/competencymodel/competency-models/automation.aspx.