So Far, Secure Coding Practices Have Been for IT Software Only. That Needs to Change.

Can we start using the particularities of Programmable Logic Controllers (PLCs) as features for security rather than as bugs?

Not a bug, but a feature for secure programming: often, programmers can physically see what their code does. If the PLC code is off, chances are a gauge somewhere does strange things. Photo by Emma Steinhobel

PLCs don’t need secure programming practices that urgently, right? And even if they did, aren’t PLCs incapable of implementing the secure coding practices we know anyway? While we’re at it: does PLC programming count as programming in the first place?

“No one learns secure PLC coding at school,” Jake Brodsky said in his recent S4x20 talk. This talk was the initial spark for the Secure PLC Programming Practices Project that Jake Brodsky, Dale Peterson, and I are now leading.

It is hosted by the ISA Global Cybersecurity Alliance and has been open to the public since last week.

This article gives an introduction to the project and its background.

Does PLC Programming Even Count as Programming?

I can’t count how often I’ve found myself in this discussion. You just have to skim through ISO/IEC 27001 security controls with an OT team. Inevitably, you come across requirements for secure development.

“Do you develop anything yourselves?”

— “Nope.”

“No software development? Coding?”

— “No, nothing. Well, we program PLCs. Does that count?”

Here we go: does PLC programming count as programming?

Usually, the answer is something like “well, sort of, but…” And indeed, it is sort of programming, but it differs from “normal” programming. For instance, it does not use any conventional programming language.

This sounds like nitpicking, given that the PLC (short for Programmable Logic Controller) even has the word “programmable” in its name. But it is worth looking at where that “programmable” came from, and this is even more striking in German: the German acronym for “PLC” is “SPS” (speicherprogrammierbare Steuerung), which roughly translates to “memory-programmable controller.” Memory-programmable sounds like a strange feature, because where else would you program?

In fact, the term once made a lot more sense. Before the PLC (“SPS”), there was the “VPS” (roughly: relay logic), which translates to “wiring-programmed controller.” So there was indeed another way to “program” a controller than by writing code into memory: by wiring. The controller’s logic was literally hard-wired by connecting electrical relays in a particular configuration, and PLCs are still programmed with that logic in mind.

One of the “fathers” of modern PLCs, Richard E. Morley of Modicon, allegedly even objected to calling the PLC a “computer” because he feared this would decrease acceptance of these new PLCs among control engineers.

This small history lesson goes a long way toward explaining why “well, sort of” is still an accurate answer to the question of whether PLC programming counts as programming. Technically it does, but then again, it is wildly different from what we now understand as programming.

“Normal” programming and PLC programming are like twins separated at birth: raised apart, they have grown into completely different adults. We can’t assume that what works for one twin will work just as well for the other.

“Normal” programming grew up in a heavily networked world, so security became an issue early on. This is why in “normal” software engineering, secure coding practices belong to the basics every software engineer or computer scientist learns at school, just like learning a programming language, efficient use of hardware resources, or useful documentation.

PLC programming, on the other hand, had different priorities. Above all, PLCs need to reliably guarantee real-time operation. Until a couple of years ago, most of them weren’t network-connected to anything other than their I/O, some neighboring PLCs, and maybe a control system.

This is not the article to emphasize differences between IT and OT (see this one instead). For now, let’s just keep in mind that while normal programs mainly move around data that you can’t see or touch (hence the notorious “bits and bytes” or “ones and zeros”), PLC programs move around very tangible things like valves, gates, or fluids.

We can’t just assume that what helps ensure normal programs’ security works just the same for PLC programs.

Easy Excuses for Not Applying Secure Coding to PLCs

So far, secure coding for PLCs has not been very popular, probably for a variety of reasons. One is that it is easy to dismiss the idea based on two frequently cited false assumptions:

False Assumption: PLCs Don’t Need Secure Coding Practices

Take input validation, one of the most basic secure coding principles: input validation is important to prevent undefined program states and to prevent users from injecting executable code. A PLC’s inputs come from a physical process, so they naturally can only be within a certain range, right?

Of course, this assumption stops working if you begin taking malevolence into account. Also, not all inputs are sensor values: how about timers, counters, and setpoints?
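Here is a minimal sketch of the point above. It is written in Python purely for readability; on a real PLC this would be IEC 61131-3 code such as Structured Text. The sketch rejects an implausible raw 4–20 mA signal before scaling it, and it treats a setpoint as an input that needs its own explicit limits. The class and function names, the 3.8–20.5 mA plausibility window, and the 0–5 m level range are assumptions for illustration. A range check alone does not catch a malicious value that stays inside the range. That gap is what the physical plausibility checks discussed below address.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnalogInput:
    """Hypothetical 4-20 mA input, scaled to engineering units (e.g., tank level in m)."""
    eng_min: float          # engineering value at 4 mA
    eng_max: float          # engineering value at 20 mA
    ma_low: float = 3.8     # assumed lower plausibility limit of the raw signal
    ma_high: float = 20.5   # assumed upper plausibility limit of the raw signal

    def scale(self, ma: float) -> float:
        """Reject an implausible raw signal, then scale it linearly to engineering units."""
        if not self.ma_low <= ma <= self.ma_high:
            raise ValueError(f"raw signal {ma} mA outside plausible window")
        value = self.eng_min + (ma - 4.0) / 16.0 * (self.eng_max - self.eng_min)
        # Clamp slight under/over-range readings to the physical limits
        return min(max(value, self.eng_min), self.eng_max)


def validate_setpoint(value: float, low: float, high: float) -> float:
    """Setpoints come from operators or HMIs, not from the process, so bound them explicitly."""
    if not low <= value <= high:
        raise ValueError(f"setpoint {value} outside [{low}, {high}]")
    return value


level = AnalogInput(eng_min=0.0, eng_max=5.0)   # hypothetical 0-5 m level transmitter
print(level.scale(12.0))                         # mid-range signal -> 2.5
```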

False Assumption: PLCs Are Not Capable of Implementing Secure Coding Practices

The established secure coding practices were never written with PLCs in mind. So some of them are in fact not easily transferable to PLCs. Take integrity checks: in IT systems, integrity checks are often done using cryptographic mechanisms like hashes, so there’s an easy excuse for not checking integrity in PLCs: “The PLC does not have enough resources for cryptographic algorithms.”

Even if that is true, it does not mean the PLC has no means of checking integrity.

Regarding PLC Particularities as Features, Not Bugs for Secure Coding

As so often in OT security engineering, the crucial step is to stop looking at OT’s differences from IT as bugs and to start regarding them as features. That means using them, instead of trying to work around them, when creating secure practices. The same security requirements that matter for most IT components also matter for PLCs; what differs is how they are implemented.

Let’s stay with the security requirement of checking code and data integrity: it may indeed not be the best idea to use checksums or hashes for integrity checking on a PLC, because they add too much overhead.

But you can instead use some very PLC-specific features to point you to an integrity violation:

- They control a physical process

- They are deterministic (i.e., always deliver the same output given a particular input)

These two very basic PLC particularities already provide you with a surprisingly wide range of options for secure PLC coding.

Examples?

First, determinism. PLCs operate with a deterministic scan cycle, which is crucial for guaranteeing real-time behavior, so cycle times should stay very constant over time. An abrupt change in cycle time therefore indicates that something has changed: the PLC logic, the network environment, or the controlled process.

So our current #13 Secure PLC Coding Practice targets exactly that:

13. Summarize PLC cycle times and trend them on the HMI
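Here is a minimal sketch of #13, again in Python for readability. It assumes the PLC exposes its last scan time in milliseconds; how it does so is vendor-specific. The hypothetical `CycleTimeMonitor` keeps a rolling window of scan times and produces a summary suitable for trending on an HMI. It also flags a scan time that deviates from the window's mean by more than an assumed relative tolerance.

```python
from collections import deque
from statistics import mean, pstdev


class CycleTimeMonitor:
    """Hypothetical helper: summarize recent scan times and flag abrupt changes."""

    def __init__(self, window: int = 100, rel_tolerance: float = 0.2):
        self.samples = deque(maxlen=window)   # most recent scan times in ms
        self.rel_tolerance = rel_tolerance    # assumed allowed deviation from the baseline mean

    def add(self, scan_ms: float) -> bool:
        """Record one scan time; return True if it deviates abruptly from the baseline."""
        abrupt = False
        if len(self.samples) == self.samples.maxlen:   # only judge once the window is full
            baseline = mean(self.samples)
            abrupt = abs(scan_ms - baseline) > self.rel_tolerance * baseline
        self.samples.append(scan_ms)
        return abrupt

    def summary(self) -> dict:
        """Min/mean/max/standard deviation of the window, e.g. for trending on the HMI."""
        if not self.samples:
            raise ValueError("no samples recorded yet")
        return {"min": min(self.samples), "mean": mean(self.samples),
                "max": max(self.samples), "stdev": pstdev(self.samples)}


mon = CycleTimeMonitor(window=5)
for t in [10.0, 10.1, 9.9, 10.0, 10.0]:
    mon.add(t)
print(mon.add(15.0))   # True: 15 ms is far more than 20 % above the ~10 ms baseline
```

Anomalous samples still enter the window. After a legitimate change, such as a planned program update, the baseline therefore re-adapts instead of alarming forever. Whether that trade-off is acceptable is a judgment call for each installation.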

Just like determinism, the fact that PLCs control a physical process can be used to security’s advantage. Knowledge about the physical process can serve as a means for input validation you would not have on a “normal” computer just processing data: for example, you can validate inputs based on how long an operation would physically take. If the gate on a dam physically takes one minute to go from fully closed to fully open, but suddenly completes the task in five seconds, what does this tell you?

So our current #1 and #4 Secure PLC Coding Practices are:

1. Instrument for plausibility checks

4. Validate inputs based on physical plausibility
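One way to turn the dam gate example into an input check is sketched below in Python. It rests on three assumptions: the gate position is read as 0–100 % open, full travel nominally takes 60 seconds, and a 1.5× margin absorbs normal variation. The function name and parameters are hypothetical. The check flags a position change that is faster than the gate can physically move.

```python
def position_rate_plausible(prev_pct: float, curr_pct: float, dt_s: float,
                            full_travel_s: float = 60.0, margin: float = 1.5) -> bool:
    """Return True if a change in gate position is physically possible.

    prev_pct, curr_pct: consecutive position readings in % open (0-100)
    dt_s: time between the two readings in seconds
    full_travel_s: assumed nominal time for 0 % -> 100 % travel
    margin: assumed tolerance factor on top of the nominal maximum speed
    """
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    max_rate_pct_per_s = 100.0 / full_travel_s * margin
    return abs(curr_pct - prev_pct) / dt_s <= max_rate_pct_per_s


print(position_rate_plausible(10.0, 12.0, 1.0))   # True: 2 %/s is within 2.5 %/s
print(position_rate_plausible(0.0, 100.0, 5.0))   # False: fully open in 5 s is 20 %/s
```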

There is another, maybe unexpected, Secure PLC Coding Practice that combines PLC particularities, physical process control, and determinism: I/O control power usage can be used for physical integrity checks.

How?

Well, if you sum up all 4–20 mA current loops, taking into account the states they are in and the number of digital I/O endpoints (both of which the PLC knows), you should get an accurate representation of overall I/O control power usage.

If overall I/O power usage differs from the sum of the I/O loops, this could indicate that a new field device has been connected to your PLC or that something has been rewired.

This can be found at #16 in our current Secure PLC Coding Practices list:

16. Sum up control power usage for all I/Os in the PLC
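Here is a minimal Python sketch of the #16 comparison. It assumes the total I/O control power current can be measured somehow, for example via a current transducer on the control power feed wired back into an analog input. It also assumes that the current drawn per energized digital point and the modules’ base load are known. All names and numbers are hypothetical.

```python
from typing import Sequence


def expected_io_current_ma(analog_loops_ma: Sequence[float],
                           digital_states: Sequence[bool],
                           ma_per_active_digital: float,
                           base_load_ma: float = 0.0) -> float:
    """Expected control-power current, derived from values the PLC already knows.

    analog_loops_ma: present current of each 4-20 mA loop
    digital_states: on/off state of each digital I/O point
    ma_per_active_digital: assumed current drawn by one energized digital point
    base_load_ma: assumed constant draw of the I/O modules themselves
    """
    active_points = sum(1 for on in digital_states if on)
    return base_load_ma + sum(analog_loops_ma) + active_points * ma_per_active_digital


def io_power_mismatch(measured_ma: float, expected_ma: float, tolerance_ma: float) -> bool:
    """True if the measured total deviates from the expected sum by more than the tolerance."""
    return abs(measured_ma - expected_ma) > tolerance_ma


expected = expected_io_current_ma([4.0, 12.0, 20.0], [True, False, True],
                                  ma_per_active_digital=5.0, base_load_ma=100.0)
print(expected)                                  # 146.0
print(io_power_mismatch(180.0, expected, 5.0))   # True: something unexpected draws power
```

In this example, three analog loops at 4, 12, and 20 mA, two energized digital points at an assumed 5 mA each, and an assumed 100 mA base load give an expected 146 mA. A measured 180 mA exceeds the 5 mA tolerance and would warrant a look at the wiring.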

How the Project Is Organized: It’s Not Only About Security

Now that you have an idea of what is on our Top 20 list and its background, I’ll quickly explain how the project is currently organized.

Currently, the Top 20 list contains 17 Secure PLC Coding Practices, plus about thirty candidate practices that could make it onto the list once they are more mature.

Each practice on the list has a title, a short description, and a three-digit unique ID. Clicking the ID takes you to a page with more detailed information. Besides the title and short description, these details can include:

- Guidance: implementation hints or background knowledge

- Examples: how the practice could be implemented or what kind of real-life issues it could prevent

- Why? The rationale behind the practice

To me, the “why” is the most important part. It explains the motivation behind every practice, which hopefully improves acceptance of the list, and it also busts the myth that security and usability are always trade-offs.

All current practices naturally have security benefits, but most have other benefits as well. Where applicable, we list benefits for:

- Security

- Reliability/resilience

- Adaptability/agility/maintainability

- Sustainability

Security only costs, but never earns money? At least for PLC security, we may want to question this commonplace statement.

Secure PLC Coding Practices: Two Examples

Enough theory! Here are two example practices in full detail. Only the headline and the two-line short description appear in the Top 20 list itself.

Please note that these examples are only snapshots; their most recent versions and the entire discussion are on the project platform. As soon as the Top 20 list is mature enough to approach version 1.0, it will also be released as a stand-alone publication.

Example 1: Validate Inputs Based on Physical Plausibility [ID216]

Set a timer for an operation to the duration it should physically take. Consider alerting when there are deviations.

Guidance

If the operation takes longer than expected to go from one extreme to the other, that’s worthy of an alarm.

By the way, if it completes the operation too quickly, that is worthy of an alarm too.

Example

The gates on a dam take a certain time to go from fully closed to fully open.

In a wastewater utility, a wet well takes a certain time to fill.

Why?

Beneficial for…

- Security: deviations can indicate that an actuator was already partway through its travel or that someone is trying to fake out the I/O, e.g., with a replay attack

- Reliability/resilience: deviations give you an early alert for broken equipment due to electrical or mechanical failures
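The guidance of ID216 can be sketched as a small travel-time check, shown here in Python for readability. The class name, the symmetric ±20 % tolerance, and passing timestamps in seconds are assumptions; a PLC implementation would more likely use its built-in timers. Both finishing too fast and taking too long produce a status worth alarming on.

```python
from enum import Enum
from typing import Optional


class TravelStatus(Enum):
    OK = "ok"
    TOO_FAST = "too fast"
    TOO_SLOW = "too slow"
    IN_PROGRESS = "in progress"


class TravelTimer:
    """Hypothetical travel-time check for one actuator, e.g. a dam gate."""

    def __init__(self, expected_s: float, tolerance: float = 0.2):
        self.min_s = expected_s * (1 - tolerance)   # finishing faster than this is implausible
        self.max_s = expected_s * (1 + tolerance)   # taking longer than this suggests a fault
        self.started_at: Optional[float] = None

    def start(self, now_s: float) -> None:
        """Call when the move command is issued."""
        self.started_at = now_s

    def check(self, now_s: float, end_reached: bool) -> TravelStatus:
        """Call every scan with the current time and the end-position feedback."""
        if self.started_at is None:
            raise RuntimeError("travel was not started")
        elapsed = now_s - self.started_at
        if not end_reached:
            # Still moving: alarm as soon as the maximum plausible time has passed
            return TravelStatus.TOO_SLOW if elapsed > self.max_s else TravelStatus.IN_PROGRESS
        self.started_at = None   # travel finished; judge the total duration
        if elapsed < self.min_s:
            return TravelStatus.TOO_FAST
        if elapsed > self.max_s:
            return TravelStatus.TOO_SLOW
        return TravelStatus.OK


gate = TravelTimer(expected_s=60.0)
gate.start(now_s=0.0)
print(gate.check(now_s=5.0, end_reached=True))   # TravelStatus.TOO_FAST
```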

Example 2: Validate Timers and Counters [ID207]

If outputs and timer values are written to the PLC program, the PLC should validate them for reasonableness and check for backward counts below zero.

Guidance

Timers and counters can technically be preset to any value. Therefore, restrict the range of values that a timer or counter may be preset to.

If you allow remote devices, such as an HMI, to write timer or counter values to a program:

- Do not let the HMI write to the timer or counter directly

- Validate presets and timeout values

Validating timer and counter ranges is easiest to do directly in the PLC because, unlike any network device capable of Deep Packet Inspection (DPI), the PLC “knows” the process state or context. It can validate both what it receives and WHEN it receives commands or setpoints.
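Here is a sketch of this guidance in Python. The HMI only writes to a request field, and the PLC adopts the value during its scan only if the value is within range and the process is in a state where changes are allowed. `PresetGuard`, the `changes_allowed` condition, and the limits are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PresetGuard:
    """Hypothetical staging area: the HMI writes a request, the PLC decides whether to adopt it."""
    low: float                         # assumed lowest acceptable preset
    high: float                        # assumed highest acceptable preset
    active: float                      # preset actually used by the control logic
    requested: Optional[float] = None  # written by the HMI, never used by the logic directly

    def hmi_request(self, value: float) -> None:
        self.requested = value

    def plc_scan(self, changes_allowed: bool) -> bool:
        """Adopt a pending request only if it is in range AND the process state allows it.

        Returns True if the active preset was changed.
        """
        if self.requested is None:
            return False
        value, self.requested = self.requested, None   # consume the request either way
        if changes_allowed and self.low <= value <= self.high:
            self.active = value
            return True
        return False   # rejected: out of range or at the wrong time (a real program would also alert)


alarm_delay = PresetGuard(low=1.0, high=2.0, active=1.3)
alarm_delay.hmi_request(300.0)                  # 5 minutes, far outside the valid range
print(alarm_delay.plc_scan(changes_allowed=True), alarm_delay.active)   # False 1.3
```

Rejected requests are discarded rather than retried. A real program would likely also raise an alert so that the operator or a security monitor notices.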

Example

For example, during PLC startup, timers and counters are usually preset to certain values.

If you have a timer that triggers an alarm after, for example, 1.3 seconds, and someone maliciously presets that timer to 5 minutes, it will never trigger the alarm you’re waiting for.

If you have a counter that causes a process to stop when it reaches 10,000 and you set it to 11,000 from the beginning, the process will never stop.
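The startup examples above can be turned into a plausibility check that runs after startup. The Python sketch below uses hypothetical names and ranges. Each timer gets an assumed valid range, and each counter is checked both for backward counts below zero and for values already past its stop limit.

```python
from typing import Dict, List, Tuple


def startup_check(timer_presets: Dict[str, Tuple[float, float, float]],
                  counters: Dict[str, Tuple[int, int]]) -> List[str]:
    """Return a list of findings; an empty list means all values look plausible.

    timer_presets: name -> (preset_s, min_s, max_s); the ranges are assumptions per timer
    counters: name -> (current_value, stop_limit) for count-up counters
    """
    findings = []
    for name, (preset, lo, hi) in timer_presets.items():
        if not lo <= preset <= hi:
            findings.append(f"timer {name}: preset {preset} s outside [{lo}, {hi}]")
    for name, (value, limit) in counters.items():
        if value < 0:
            findings.append(f"counter {name}: negative count {value}")
        elif value > limit:
            findings.append(f"counter {name}: {value} already past stop limit {limit}")
    return findings


# The article's examples: a 5-minute preset on a ~1.3 s alarm timer, a counter starting at 11,000
print(startup_check({"alarm_delay": (300.0, 1.0, 2.0)}, {"batch": (11_000, 10_000)}))
```

With the article's numbers, both the 300 s (5 minute) preset and the counter starting at 11,000 against a stop limit of 10,000 are reported.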

Why?

Beneficial for…

- Security: if I/O values, timers, or presets are written directly without being validated by the PLC, the PLC validation layer is bypassed and the HMI (or another network device) is granted an unwarranted level of trust

- Reliability/resilience: the PLC can also catch bad timer or counter values that were preset by accident

- Adaptability/agility/maintainability: having valid ranges for timers and counters documented and automatically validated may help when changing programs

What’s Next?

The main goal for the next few months will be to improve the practices that are already there and add new ones.

Also, we will think about how best to organize them to fit the way PLC programmers and/or security experts think, and maybe add a second layer of organization to help explain core aspects to management.

Later on, it may make sense to include cross-references or even build upon existing work, like:

- Secure coding practices for normal IT (e.g. OWASP, Carnegie Mellon University, CWE, Microsoft)

- PLC coding guidelines like those from PLCopen or IEC 61131 in general (thanks for the hint, Anton!)

- General ICS / OT security standards and frameworks like ICS ATT&CK or ISA/IEC 62443

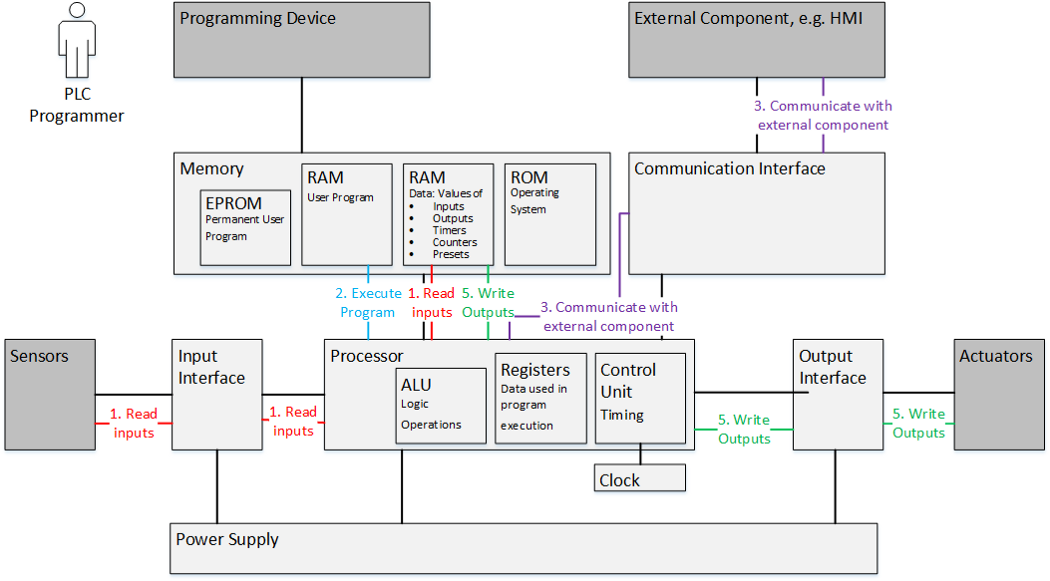

Also, we’re planning on introducing more background information on PLC particularities in order to better explain the practices in general and the “why” in particular. We’re thinking of having PLC models and explanations for different aspects like wiring, hardware, software, and the PLC scanning cycle similar to this:

Model of typical PLC hardware and the scan cycle

Get Involved!

The Top 20 Secure PLC Coding Practices are aimed at becoming a resource that PLC programmers can actually use in their daily work. In order to make that happen, we need input from as many practitioners as possible.

Together, we can make Secure PLC Coding Practices common knowledge, just like “normal” Secure Coding Practices.

Let’s stop looking at PLC programming as a weird variety of “normal” programming that cannot be done securely. Let’s prove instead that secure programming for PLCs is entirely doable if PLCs’ particularities like determinism and controlling a physical process are understood as features instead of bugs for secure programming.

This article originally appeared on Medium.